How Intelligent Is C-3PO Really? An Introduction to the Ladder of Causation

Posted February 21, 2023 by Dustin Frederik Rusteberg and Paul Hünermund ‐ 10 min read

What can an age-old science fiction flick tell us about the quest for general AI? Explaining the ladder of causation using the example of C-3PO.

“Don’t worry about Master Luke. I’m sure he’ll be all right. He’s quite clever, you know… for a human being” – C-3PO, Star Wars Episode V

Introduction

This is one of the iconic quotes from George Lucas’ character C-3PO in his 1980 movie “Star Wars Episode V: The Empire Strikes Back”. In this quote, the protocol droid implies that, in a galaxy far, far away, droids believe themselves to be more intelligent than humans. However, according to Judea Pearl in his “Book of Why”, to achieve true, human-like intelligence, artificial intelligence (AI) must first conquer the ladder of causation, a three-step ladder in which each step represents an increased level of causal thinking. Despite its impressive pattern recognition capabilities, contemporary AI has not yet achieved this milestone. If droids are to be considered superior to humans in the Star Wars universe, this raises the question of whether they show traces of the highest level of causal reasoning: counterfactual thinking.

Seeing, Doing and Imagining

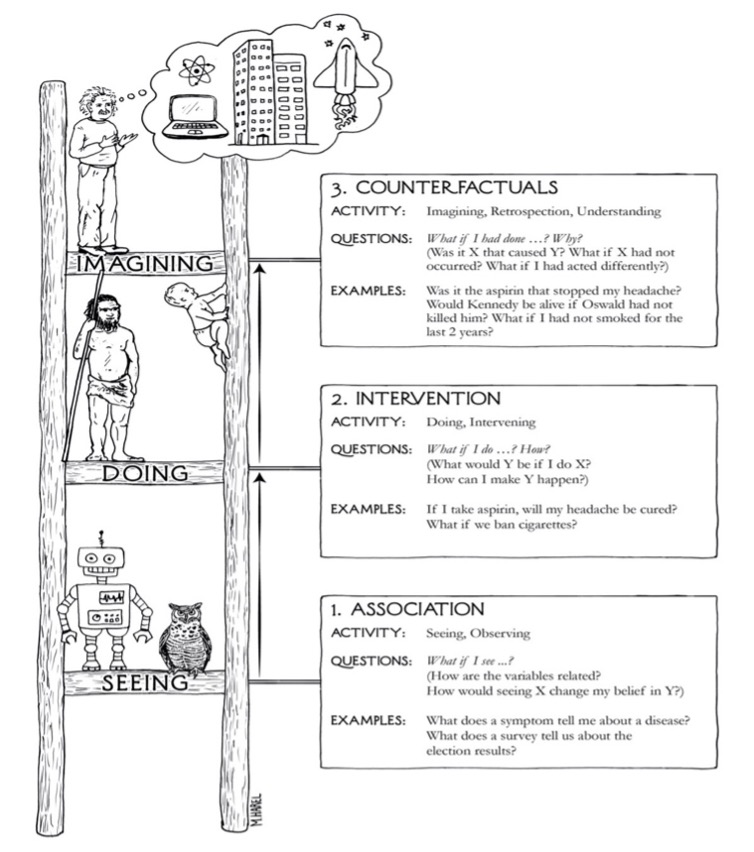

The intelligence of standard AI is exclusively based on predictions derived from passive observations. This means that we are very good at teaching a machine to calculate the probability P of a variable A given that we see B occurring (written in mathematical terms as P(A|B)). What is impressive here is not so much the machine’s intelligence, but rather the number of problems that can be tackled by this kind of ‘curve-fitting’ (Pearl, 2018). Nevertheless, the methods applied by contemporary machines remain on the first rung of the ladder of causation because they rely exclusively on observing data and associating variables. Unfortunately, this only allows them to answer a limited range of queries of the kind: “What if I see …?”.

When we are trying to answer causal questions, we need to step up one level on the ladder of causation. The second rung is all about intervening and doing. Intervention refers to changing the as-is status and is categorically different from association. Critically, intervention changes the data generating process. This sounds confusing, so why is it important? Essentially, it means that P(A|B) is not the same as P(A|do(B)): the probability of A given that B occurs is not the same as the probability of A given that we do B. To solve such intervention questions, we need to rely on a different kind of input knowledge, one that cannot easily be found in the data alone. Consider this case: In European countries, the stork population is highly associated with the birth rate (Matthews, 2000). To most readers, the senselessness of reading this correlation causally will be obvious. However, to prevent a potential AI-chancellor from mass-breeding storks to increase the number of taxpayers, we need to enable the AI to reason in terms of intervention rather than association. This level permits the formulation of causal queries like “What if I do…?” or “How can I make Y happen?”. The ability to ask and answer these questions takes us an important step closer to human-like intelligence.
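To make the difference between seeing and doing concrete, here is a minimal simulation sketch of the stork example. It is a toy model, not an analysis of the data in Matthews (2000): the hidden common cause `rural`, all probabilities, and the function names `simulate` and `share_high_births` are assumptions made purely for illustration. In this toy world, storks have no effect on births at all, yet seeing storks still predicts births.

```python
import random

def simulate(n=100_000, force_storks=None, seed=0):
    """Draw n regions from a toy causal model (all numbers are assumptions).

    rural -> storks and rural -> high_births; storks do NOT affect births.
    If force_storks is given, we intervene: do(storks = force_storks).
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        rural = rng.random() < 0.5  # hidden common cause (hypothetical)
        if force_storks is None:
            # Observational regime: storks depend on how rural the region is.
            storks = rng.random() < (0.8 if rural else 0.2)
        else:
            # Interventional regime: we set storks ourselves, ignoring `rural`.
            storks = force_storks
        # Births depend only on `rural`, never on storks.
        high_births = rng.random() < (0.7 if rural else 0.3)
        rows.append((storks, high_births))
    return rows

def share_high_births(rows, storks_value):
    """Fraction of regions with high births among those with the given stork status."""
    selected = [births for storks, births in rows if storks == storks_value]
    return sum(selected) / len(selected)

# Rung one: P(births | storks) from passive observation.
observed = simulate()
p_see_storks = share_high_births(observed, True)
p_see_no_storks = share_high_births(observed, False)

# Rung two: P(births | do(storks)) after breeding or removing storks everywhere.
p_do_storks = share_high_births(simulate(force_storks=True), True)
p_do_no_storks = share_high_births(simulate(force_storks=False), False)

print(f"seeing: {p_see_storks:.2f} vs {p_see_no_storks:.2f}")
print(f"doing:  {p_do_storks:.2f} vs {p_do_no_storks:.2f}")
```

Under these assumed numbers, the observational shares should land near 0.62 and 0.38, while both interventional shares stay near 0.5. An AI-chancellor looking only at the first line would breed storks; one that can evaluate the second line would not.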

Despite that, intervention does not constitute the last level of the ladder of causation. To finally ascend to this step, AI needs the ability to comprehend why an error took place and what corrective steps are available to prevent the mistake from happening in the future. To learn from mistakes and the experiences of others, it must be able to imagine potential realities that did not occur. More precisely, it requires the ability to ask and understand questions like “What would have happened if I had done things differently?” or, “Was it really X that caused Y?”. In essence, the final step of the ladder of causation is about asking and answering counterfactual questions.
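In structural causal models, a counterfactual is commonly computed in three steps: abduction (infer the unobserved background factors from what actually happened), action (change the variable of interest), and prediction (recompute the outcome). The sketch below illustrates this on a deliberately simple linear model Y = BETA * X + U. The model, the value of `BETA`, and the observed numbers are all assumptions chosen for illustration.

```python
BETA = 2.0  # assumed causal effect of X on Y (hypothetical)

def outcome(x, u):
    """Structural equation of the toy model: Y = BETA * X + U."""
    return BETA * x + u

def counterfactual(x_obs, y_obs, x_new):
    """Answer: what would Y have been, had X been x_new instead of x_obs?"""
    # Step 1, abduction: recover the background factor U from the observed case.
    u = y_obs - BETA * x_obs
    # Steps 2 and 3, action and prediction: set X to x_new, keep U, recompute Y.
    return outcome(x_new, u)

# We observed X = 1 and Y = 5. What if X had been 0?
y_cf = counterfactual(x_obs=1.0, y_obs=5.0, x_new=0.0)
print(y_cf)  # 3.0
```

The key point is that the background factor U is held fixed at the value implied by the observed case. This is what separates a counterfactual about this particular situation from an intervention on the population as a whole.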

So, if droids in Star Wars could possibly have achieved human-like intelligence, they should exhibit counterfactual thinking. To figure out whether that’s the case, let us take a closer look at two of C-3PO’s statements from the fifth episode of the Star Wars series. Quote 1:

“Sir, the possibility of successfully navigating an asteroid field is approximately 3,720 to 1.”

This quote is probably one of the most heavily discussed statements of the entire Star Wars universe. It has caught the interest of math professors (Knudsen, 2017), PhD students (Marshall, 2015), and fans on platforms like Reddit and StackExchange alike. The quote occurs in a situation where the crew of the Falcon, Han Solo’s infamous starship, has only barely dodged three Star Destroyers and, because of a hyperdrive malfunction, is still being chased by Imperial starships. To make things more complicated, the Falcon is accidentally heading into an asteroid field. The most frequent explanations for how C-3PO came up with this specific probability are 1) a frequentist approach, 2) the application of Bayesian networks, or 3) familiarity with the actuarial tables used by the various insurance agencies operating in the galaxy. These explanations are very amusing and have in common that they rely on methods consistent with the first rung of the ladder of causation. Hence, with advanced mathematics and computing power, it should be easy to create an AI that recognizes its environment and calculates survival chances. After all, similar things are already performed by contemporary vacuum cleaner robots.

Let us assume that C-3PO, being a machine, does not panic but is able to carry out calculations at his normal computing power, even under stress. We can thus analyse how C-3PO might have argued were he able to think in line with rung three of the causal ladder. In that case, he might have asked himself the counterfactual question “What would happen if we were to avoid steering through the asteroid field?”. The answer to that question apparently is: They would have to face three Star Destroyers, as they are not able to escape them at light speed. Later in the movie, C-3PO gets interrupted while trying to state the survival chances of attacking a Star Destroyer. Although C-3PO does not finish his sentence, our best guess is that they are bad. Hence, facing three of them should be at least as difficult. The important thing to observe is that rung one knowledge about either option in isolation is useless for decision-making in this case. The chances of survival associated with flying through an asteroid field are, to no one’s surprise, bad. The same applies to facing Star Destroyers. Thanks for nothing, C-3PO.

Comparing the probabilities of death between facing three Star Destroyers and steering through an asteroid field would be the more logical choice. Such a comparison requires counterfactual thinking, though. Instead, it is Han Solo who makes this comparison and decides to take his chances in the asteroid field. Even without knowing the exact numbers, weighing the chances of surviving the asteroid field against those of facing the Star Destroyers might have led C-3PO to suggest steering through the asteroid field. Instead, C-3PO chose to state the obvious.
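The comparison C-3PO skipped can be sketched in a few lines. Two assumptions are made loudly here. First, “3,720 to 1” is read as odds against success, which gives a success probability of 1/3721. Second, the movie never states the chances of surviving three Star Destroyers, so `p_star_destroyers` below is a purely hypothetical placeholder and not a figure from the film or from this article. The function names are illustrative as well.

```python
from fractions import Fraction

def odds_against_to_probability(against, in_favour=1):
    """Convert odds of `against` to `in_favour` into a success probability."""
    return Fraction(in_favour, against + in_favour)

def better_option(options):
    """Return the name of the option with the highest survival probability."""
    return max(options, key=options.get)

# C-3PO's figure, read as odds of 3,720 to 1 against success.
p_asteroids = odds_against_to_probability(3720)

# HYPOTHETICAL placeholder: the source gives no number for this option.
p_star_destroyers = Fraction(1, 10_000)

choice = better_option({
    "asteroid field": p_asteroids,
    "three Star Destroyers": p_star_destroyers,
})
print(f"asteroid field: {float(p_asteroids):.4%}")
print(f"recommended: {choice}")
```

Under this reading, the asteroid field offers a survival chance of roughly 0.027%. That number only becomes useful for a decision once it is set against the alternative, which is exactly the step Han Solo takes intuitively.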

On a side note, overturning an AI’s recommendation, as Han Solo did in this scene, and the interplay between human decision makers and AI more generally, is a hotly discussed topic in AI research (see for example van den Broek et al., 2022; Seidel et al., 2019; Lindenbaum et al., 2019). Traditional information technologies, like intranets, expert systems or virtual networks, mainly augmented and contributed to knowledge work. Machine learning systems, by contrast, themselves create knowledge more or less independently, while possibly mitigating human biases, inefficiencies, and path-dependencies. Here, van den Broek et al. (2022) argue that a tension develops between independence, which refers to the production of knowledge without needing to rely on domain experts, and relevance, defined as producing knowledge which is useful to a domain. Coming back to our example at hand, C-3PO independently created knowledge from data he had access to. Great! Did that help in the decision-making process? Not at all. Han Solo, our domain expert, overturned C-3PO’s advice because it was not relevant, and, for all we know, rightfully so.

In the study mentioned above, the researchers find that developers and domain experts manage the tension by selectively including and excluding their expertise in a process described as mutual learning. In other words, a hybrid practice emerges, consisting of a combination of applied machine learning and domain expertise (van den Broek et al., 2022). The framework presented in the paper highlights three situations in which errors occur frequently on the side of the AI, meaning that domain experts or developers need to give their specific knowledge priority over the machine’s recommendation. First, data selection problems appear when the AI has difficulties selecting the correct data to learn from. Second, framing problems occur when the machine is constrained by the algorithm’s functions and available data. Lastly, a mismatch problem arises when the AI fails to match its operations to human reasoning.

Applying our newly acquired knowledge to our example, one might argue that Han Solo reflected on C-3PO’s knowledge production capabilities and might have concluded that a mismatch problem had occurred, due to C-3PO not thinking counterfactually. Hence, Han Solo reverted to his domain expertise to make the decision. Realistically speaking, this is probably not the story the movie tries to tell. If anything, Han Solo most likely reverted to his domain expertise in gambling.

Returning to the initial question, one might ask whether C-3PO’s obsession with stating probabilistic facts, which he exhibits multiple times during the movies, indicates that droids are stuck at rung one of the ladder of causation even in the realm of Star Wars. Let’s have a look at the second quote. Quote 2:

“If only you’d attached my legs, I wouldn’t be in this ridiculous position.”

This statement is made when C-3PO is being carried by Chewbacca while they are approaching the chamber in which Han Solo is about to be put into hibernation. Prior to that, C-3PO had been shot and partly destroyed during an exploration of the mining operation called “Cloud City”. In prison, Chewbacca prioritized reattaching C-3PO’s head and arms. From this quote, it is evident that C-3PO imagines a reality in which he has the freedom to move for himself and witness the events that are about to occur. Besides C-3PO seemingly being able to feel the emotion of shame, the quote suggests that C-3PO possesses the ability to think in counterfactual states as humans do.

What is questionable, from a human standpoint, is the accuracy of the counterfactual state he imagines. With his legs attached, he believes that his position would improve. However, as everyone has witnessed, his legs provide him with very limited flexibility and speed. Being strapped to the back of the more mobile Chewbacca probably increases his chances of survival considerably and, thus, improves his position. It seems like C-3PO is much more concerned with his social status than his health, something that could still be in character, considering he was programmed for etiquette and customs.

Concluding Remarks

Answering this article’s question, the second quote suggests that C-3PO has conquered the ladder of causation and has achieved somewhat human-like intelligence. Showcasing the ability to think in counterfactuals indicates that he has capabilities that surpass those of contemporary AI. Does that mean that George Lucas considered the ladder of causation when producing Star Wars? The answer is no. Firstly, putting the quotes into context, they were probably included in the movie to increase tension, help the character development of Han Solo, and provide comic relief. In fact, an argument can be made that counterfactual thinking is an essential part of comedy and satire, as it constructs alternative scenarios that clash with reality in a surprising and humorous form. The fact that the comic counterfactual was recently introduced as a term to the lexicon of rhetorical studies underlines this notion nicely (Waisanen, 2018). Secondly, Bayesian networks and advanced calculation power were believed to be the keys to human-like AI in the 1980s, with Judea Pearl formerly being an advocate of this idea. Maybe C-3PO’s behaviour, as exhibited in the first quote, is how people of the time imagined intelligent machines to be: immense computing power to solve complex calculations. Conversely, causal data science nowadays lets us understand that it is C-3PO’s ability to imagine a fictional world in which he has the freedom to move for himself that is in fact the much more crucial ability.