Using Causal Inference and Experimentation to Improve our Referral Program

Posted August 28, 2022 by Ben Tengelsen ‐ 8 min read

In this post, Ben Tengelsen from the IntelyCare Research Group explains how Bayesian structural time-series models and experimentation helped to improve a referral program.

Introduction

Like most companies, IntelyCare loves customer referrals. We’re a 2-sided, gig-style marketplace matching nursing professionals with open shifts at nursing homes, and as such we have a round-the-clock recruiting operation in over 30 states. Nursing professionals come to our platform from a variety of sources, but those who come via referrals tend to stay on our platform for longer and maintain a higher level of engagement.

A while ago we ran a large-scale experiment aimed at increasing our referral volume. Our experiment was motivated in part by concepts in behavioral economics, specifically something called present bias. People exhibit present bias when they prefer smaller rewards right away over larger rewards far in the future. Our question was whether present bias was sufficiently present among IntelyPros (the nursing professionals working for us) such that small, immediate rewards for referrals would outperform a standard referral incentive program where payouts were delayed. By our estimates, the small and immediate bonuses generated an 80% increase in referrals while the larger bonus generated an increase of only 65%. Even with more referrals, the total amount spent on incentives was about 27% less for the small bonus group than for the group with larger bonuses ($1320 versus $1800).

Background on Referrals at IntelyCare

Our referral experience is pretty standard. Users have a referral link in their app and can share it with friends. When someone applies using a referral link, their application is tagged so we know who referred them.

Through 2019, this feature generated a steady number of referrals without any incentives in place. Then in early 2020 COVID-19 caused a huge increase in demand for nursing services everywhere. We needed to quickly hire as many nursing professionals as possible. Referral bonuses are common in many industries and pervasive in the gig economy. It seemed natural that financial incentives could help us increase referrals and in turn the number of nursing professionals working on our platform.

The Experiment

Seeing this as a rare blank slate, we decided to test two distinct kinds of incentives. We randomly assigned ~8,000 IntelyPros to one of three experiences:

- Referrer receives $100 when their referral completes their 1st shift

- Referrer receives an extra $1/hour on their next shift when their referral submits an application at IntelyCare.com

- Status quo - no incentives in place (control group)
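The random assignment described above can be sketched in a few lines of Python. This is only an illustration, not the code we ran: the group labels, the placeholder user IDs, and the `assign_groups` helper are all hypothetical.

```python
import random
from collections import Counter


def assign_groups(user_ids, groups, seed=0):
    """Shuffle the users, then deal them out round-robin.

    Round-robin dealing after a shuffle keeps the assignment random
    while guaranteeing group sizes differ by at most one.
    """
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    shuffled = list(user_ids)
    rng.shuffle(shuffled)
    return {uid: groups[i % len(groups)] for i, uid in enumerate(shuffled)}


# Hypothetical labels for the three experiences.
GROUPS = ["control", "bonus_100", "bonus_1_per_hour"]

# Placeholder IDs standing in for the ~8,000 IntelyPros.
user_ids = range(8000)

assignment = assign_groups(user_ids, GROUPS, seed=42)
group_sizes = Counter(assignment.values())
print(group_sizes)
```

Fixing the seed makes it possible to reproduce the split later, which helps when auditing who received which message.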

The $100 offer is a common incentive structure for referrals. It’s easy to explain and implement, which is probably why it’s popular. The $1/hr offer is less common but makes sense for our business. We’re in a highly regulated space and our IntelyPros operate as employees rather than independent contractors. As such we have a longer-than-usual application process for a gig-economy type job. The median time from when a nurse starts their application to when they complete their first shift is between 2 and 3 months. That’s a long time to wait for a bonus!

While the payout for a successful referral in the $1/hr offer is considerably less, it’s also much easier to get than the $100 bonus. Applying to work at IntelyCare takes 2 minutes. A nurse could refer their friend and see the resulting rate increase moments later. And even though the referring nurse still needs to complete their next shift to claim the bonus, they still receive the gratification of seeing their pay increase in the app right away.

We ran the experiment for about 2 months. Ideally, we would have continued for longer but we made an unfortunate promise to our marketing team that they would eventually have their lives back. To minimize spillover between the groups, the promotions were communicated directly to each nurse via email and SMS. Reminders were sent periodically throughout the duration of the experiment.

The Raw Results

We waited several months after the experiment ended to allow referred applicants to finish working their way through the application process. When the dust finally settled, the results looked like this.

Table 1: Referral Experiment Results

| | Number of Referrals | Number of Referring Employees | Converted Referrals (completed a shift) | Total Bonus Expense |
|---|---|---|---|---|
| Control Group | 93 | 82 | 13 | $0 |
| $100 Group | 108 | 46 | 18 | $1800 |
| $1/hr Group | 165 | 55 | 23 | $1320 |

That’s a solid impact, right? The $100 group has a 16% jump on the control group for referrals received and a 38% increase for converted referrals. The $1/hr group is even more impressive with a 77% increase in both referrals received and converted referrals compared to the control group. If we use the $100 bonus as our benchmark, the $1/hr bonus gives us a 53% increase in referrals received and a 28% increase in converted referrals while spending about 27% less ($1320 versus $1800).
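For readers who want to check the arithmetic, the raw lifts can be recomputed directly from Table 1. The dictionary keys and the `pct_change` helper below are our own naming for this sketch.

```python
# Figures copied from Table 1.
table1 = {
    "control": {"referrals": 93, "converted": 13, "cost": 0},
    "bonus_100": {"referrals": 108, "converted": 18, "cost": 1800},
    "bonus_1_per_hour": {"referrals": 165, "converted": 23, "cost": 1320},
}


def pct_change(new, base):
    """Percentage change of `new` relative to `base`."""
    return 100.0 * (new - base) / base


control = table1["control"]
big = table1["bonus_100"]
small = table1["bonus_1_per_hour"]

# Each treatment group against the control group.
for name in ("bonus_100", "bonus_1_per_hour"):
    group = table1[name]
    for metric in ("referrals", "converted"):
        lift = pct_change(group[metric], control[metric])
        print(f"{name} vs control, {metric}: {lift:+.1f}%")

# The $1/hr group against the $100 group, including spend.
for metric in ("referrals", "converted", "cost"):
    change = pct_change(small[metric], big[metric])
    print(f"bonus_1_per_hour vs bonus_100, {metric}: {change:+.1f}%")
```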

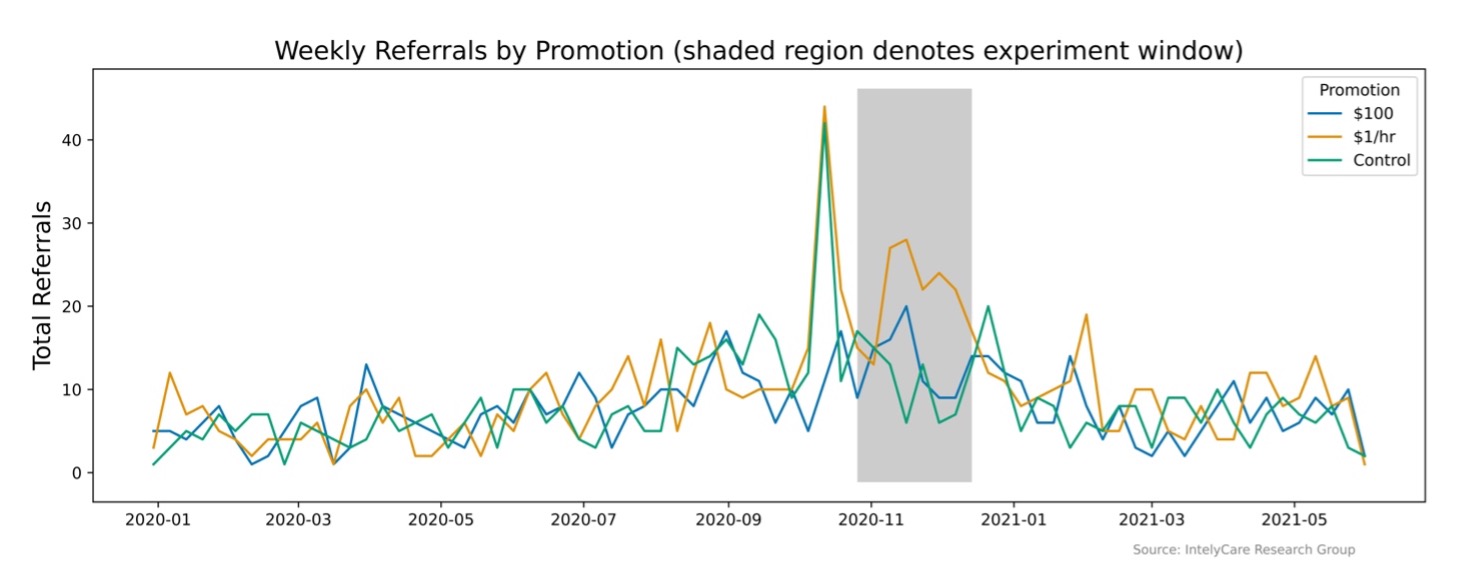

The fact that our groups were randomly selected should make measuring causal impact straightforward. We may even be tempted look at these tables and call it good. If we are confident the groups are similar and the experiment was executed well, the results from the control group should be an acceptable counterfactual. While ~2600 people in each group seems large enough, the number of people providing referrals is a small fraction of each group. With such a small number of people giving referrals, week-to-week fluctuations in referral counts can be substantial. Case in point, check out the weeks immediately preceding the experiment.

Shortly before the experiment launched, the control group and the $1/hr group had a crazy number of referrals! We lost our minds over this for a while, but after looking at every reasonable explanation we determined it was a fluke. To us, this highlighted the need for a model to account for seasonality and to quantify the uncertainty around our results.

Model-based Counterfactuals

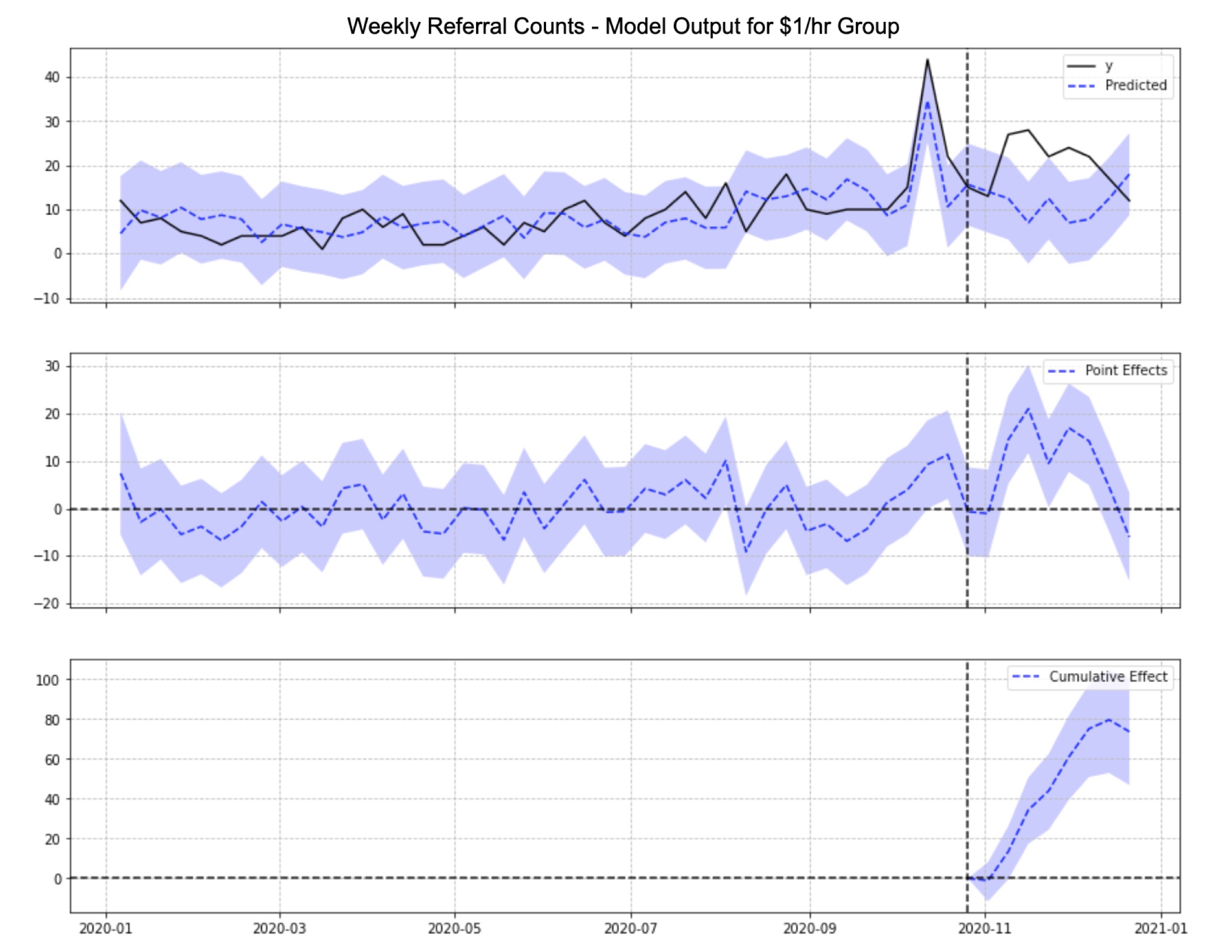

We used many models to validate our results, but for the sake of this post we’ll describe our findings from a Bayesian structural time-series model (aka Google’s CausalImpact library). This model estimates a counterfactual for each treatment group using the control group as a covariate. For data we use weekly counts of referrals in each of the groups as shown in the previous figure. The model output gives us estimates on the causal impact in addition to the following charts

Above is the model output for the $1/hr group. The top panel shows the raw data (“y”) alongside our model-generated counterfactual (“predicted”). The middle panel shows the week-by-week difference between the observed and counterfactual lines. The final panel shows the cumulative difference between the observed and counterfactual lines during the experiment (its start is indicated by the vertical dashed line). This cumulative effect is our primary metric of interest.
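To make the three panels concrete, here is a deliberately simplified stand-in for the counterfactual logic. It is not the Bayesian structural time-series model and it produces no uncertainty estimates: it fits an ordinary least-squares line of the treated series on the control series over the pre-experiment weeks, then projects that line forward. The weekly counts are made up for illustration and are not our experiment data.

```python
def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Made-up weekly referral counts, NOT the experiment's data.
control = [8, 10, 9, 12, 11, 9, 10, 13, 11, 12, 10, 12]
treated = [9, 11, 10, 13, 12, 10, 18, 22, 19, 21, 18, 20]
launch_week = 6  # index of the first week of the (hypothetical) experiment

# Learn the treated-vs-control relationship from pre-launch weeks only.
intercept, slope = fit_line(control[:launch_week], treated[:launch_week])

# Top panel: observed series next to the predicted counterfactual.
predicted = [intercept + slope * c for c in control]

# Middle panel: week-by-week difference between observed and predicted.
pointwise = [y - p for y, p in zip(treated, predicted)]

# Bottom panel: running total of the differences after launch.
cumulative = []
running = 0.0
for effect in pointwise[launch_week:]:
    running += effect
    cumulative.append(running)

print("cumulative effect by week:", [round(c, 1) for c in cumulative])
```

The last entry of `cumulative` plays the role of the cumulative effect in the bottom panel. A structural time-series model adds trend and seasonal components plus posterior intervals on top of this basic idea.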

We do the same for our $100 group and our control group. Including the control group is mostly a sanity check – we want to make sure the estimated causal impact for the control group is zero. It also helps us understand how much of the perceived impact of our treatments is due to volatility in the control group. All together our results give us the following table.

Table 2: Experiment Results – Model Estimates (standard errors in parentheses)

| | Control Group | $100 Group | $1/hr Group |
|---|---|---|---|
| Total Referrals | 93 | 108 | 165 |
| Counterfactual | 69.2 (20.6) | 65.7 (11.0) | 91.3 (14.9) |
| Absolute Effect [Total Referrals – Counterfactual] | 23.9 (20.6) | 42.3 (11.0) | 73.7 (14.9) |
| Relative Effect [Absolute Effect / Counterfactual] | 34.5% (29.8%) | 64.5% (16.8%) | 80.8% (16.3%) |
| Estimated Incremental Working IntelyPros [1 IntelyPro per 6 referrals] | 0 * | 7 | 12 |
| Total Cost | $0 | $1800 | $1320 |
| Cost per Incremental Working IntelyPro | - | $257 | $110 |

* Note the standard errors are nearly as big as the point estimate itself. We cannot reject a null hypothesis that the absolute effect is zero.
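One rough way to read the footnote is to divide each absolute effect by its standard error and convert the result to a two-sided p-value. The sketch below assumes a normal approximation, which is our simplification here; the model itself reports Bayesian posterior summaries, so treat these numbers as a quick sanity check rather than the model's own output.

```python
import math


def two_sided_p_value(estimate, std_error):
    """Return (z, p) for H0: effect == 0 under a normal approximation."""
    z = estimate / std_error
    # For a standard normal, P(|Z| > |z|) equals erfc(|z| / sqrt(2)).
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p


# Absolute effects and standard errors copied from Table 2.
effects = {
    "control": (23.9, 20.6),
    "bonus_100": (42.3, 11.0),
    "bonus_1_per_hour": (73.7, 14.9),
}

results = {name: two_sided_p_value(est, se) for name, (est, se) in effects.items()}
for name, (z, p) in results.items():
    print(f"{name}: z = {z:.2f}, p = {p:.4g}")
```

Under this approximation the control group's effect is well within noise, while both treatment effects are several standard errors away from zero.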

These model-based results differ from our raw results in some interesting ways. Most notably, the estimated counterfactuals for the control and $100 groups are about 69 and 66 referrals respectively, while the $1/hr group is estimated to have generated about 91 referrals in the absence of any promotion. If we used the raw referral count from the control group (93) as our counterfactual, we would underestimate the impact of the $100 bonus by a large amount: 15 additional referrals instead of roughly 42.

Finally, since referrals alone do not generate revenue it’s important to map the impact of our experiment in terms of incremental working IntelyPros. Fortunately, the conversion rate of referrals to working IntelyPros is similar across treatment groups and over time. This allows us to back out the increase in working IntelyPros from the increase in referrals and understand the amount we spent for each new working IntelyPro. Between the higher number of referrals and a smaller total cost, the cost per incremental IntelyPro for the $1/hr group is less than half of what we see for the $100 group.
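The last three rows of Table 2 follow from simple arithmetic, sketched here. The one-in-six conversion rate and the dollar figures come from the table; the variable and function names are ours.

```python
REFERRALS_PER_WORKING_PRO = 6  # "1 IntelyPro per 6 referrals" from Table 2

# Absolute effects and total costs copied from Table 2.
table2 = {
    "bonus_100": {"absolute_effect": 42.3, "total_cost": 1800},
    "bonus_1_per_hour": {"absolute_effect": 73.7, "total_cost": 1320},
}


def cost_per_incremental_pro(absolute_effect, total_cost):
    """Convert incremental referrals to working IntelyPros, then price them."""
    incremental_pros = round(absolute_effect / REFERRALS_PER_WORKING_PRO)
    return incremental_pros, total_cost / incremental_pros


summary = {
    name: cost_per_incremental_pro(row["absolute_effect"], row["total_cost"])
    for name, row in table2.items()
}
for name, (pros, cost) in summary.items():
    print(f"{name}: {pros} incremental IntelyPros at ${cost:.0f} each")
```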

Conclusion

Whenever we’ve shared these results with colleagues, many of them are surprised. A $100 bonus seems so much bigger than a $1/hr bonus, so how could a $1/hr bonus be more effective? Present bias! If you spin the wheel and get a reward right away, you’ll spin the wheel again. If you are required to wait a long time before receiving a reward, then spinning the wheel is suddenly not so cool. When it comes to incentives, timing and presentation often matter more than the amount of the bonus. How can you find an incentive scheme that works for your business or situation? There are so many approaches to try and every business is different. In our experience there is nothing better than test, test, test.

When we design our tests, we aspire to generate data so clean that the results are obvious without any fancy statistics. It usually doesn’t work out quite so well, and even when it does it can still be helpful to use a model to quantify impact if only to get a quick read on p-values. In our example, our model also allowed us to smooth out seasonal trends and volatile weekly aggregates that would have biased our results.